“The power goes out, our generator kicks in, the camera comes back online, and then the forest is on fire.”

John X. Wang

John X. Wang is Director-Principal Software Engineer - Functional Safety Subject Matter Expert (SME) at Capgemini. Dr. Wang authored/coauthored numerous books and papers on reliability engineering, risk engineering, engineering decision making under uncertainty, robust design and Six Sigma, lean manufacturing, green electronics manufacturing, cellular manufacturing, and industrial design engineering - inventive problem solving. His book was featured as ISE Magazine May 2017 Book of the Month.

Subjects: Animation, Business & Management, Computer Science & Engineering, Energy & Clean Technology, Engineering - Civil, Engineering - Electrical, Engineering - Environmental, Engineering - General, Engineering - Industrial & Manufacturing, Engineering - Mechanical, Engineering - Mining, Environmental Science, Nanoscience & Technology, Statistics

Biography

John X. Wang is Senior Principal Functional Safety Engineer at Flex, where he is responsible for functional safety activities for products being developed by the global engineering team. Dr. Wang has served as Senior Principal Safety Engineer (Member of Product Safety Board) at Cobham Mission Systems, where he was twice nominated for the Employee of the Month Award and was nominated for the C.A.R.E. (Certified, Ambassadors, Responsive, Exceptional) Award. Dr. Wang received his PhD degree in reliability engineering from the University of Maryland, College Park, MD, in 1995. He was then with GE Transportation as an Engineering Six Sigma Black Belt, leading Design for Reliability (DFR) and Design for Six Sigma (DFSS) while teaching in GE-Gannon University’s Graduate Co-Op programs and National Technological University professional short courses, and serving as a member of the IEEE Reliability Society Risk Management Committee. He has worked as a Corporate Master Black Belt at Visteon Corporation, Reliability Engineering Manager at Whirlpool Corporation, and Senior Principal Functional Safety Engineer at Panduit Corp. and Collins Aerospace. In 2009, Dr. Wang received an Individual Achievement Award while working as a Senior Principal Functional Safety Engineer at Raytheon Company. He joined GE Aviation Systems in 2010, where he was awarded the distinguished title of Senior Principal Functional Safety Engineer (CTH - Controlled Title Holder) in 2013. Dr. Wang has been a Group Engineer (Senior Principal Functional Safety Engineer), leading computer vision programs and robotics development at Danfoss Power Solutions, where his work on the autonomous vehicle DAVIS was celebrated.

As a Certified Reliability Engineer certified by the American Society for Quality, Dr. Wang has authored/coauthored numerous books and papers on reliability engineering, risk engineering, engineering decision making under uncertainty, robust design and Six Sigma, lean manufacturing, green electronics manufacturing, cellular manufacturing, and industrial design engineering. He has been affiliated with the Austrian Aerospace Agency/European Space Agency, Vienna University of Technology, the Swiss Federal Institute of Technology in Zurich, the Paul Scherrer Institute in Switzerland, and Tsinghua University in China.

Having presented various professional short courses and seminars, Dr. Wang has performed joint research with the Delft University of Technology in the Netherlands and the Norwegian Institute of Technology.

Since his "knowledge, expertise, and scientific results are well known internationally," Dr. Wang has been invited to present at various national and international engineering events.

Education

- Ph.D., Reliability Engineering

Areas of Research / Professional Expertise

- Risk Engineering, Reliability Engineering, Lean Six Sigma, Decision Making Under Uncertainty, Business Communication, Green Electronics Manufacturing, Cellular Manufacturing, and Industrial Design Engineering.

Personal Interests

- Poetry - having authored published poems.

Websites

Books

Articles

A Single Line Opens the Way: Inventive Problem Solving

Published: Jan 03, 2017 by Association of Manufacturing Excellence (AME) Target Online

Authors: John X. Wang

Subjects:

Engineering - General, Engineering - Industrial & Manufacturing

Poetic thinking is a life-cherishing force, a game-changing accelerator for Inventive Problem Solving of Industrial Design Engineering. One line or a few lines within a poem can upend your way of designing industrial products. It can help you recover and reclaim a way of designing products and break up acceptance of the status quo, or, put more fiercely, a single line can tear down a pre-fabricated understanding of your customers.

Achieve robust designs with Six Sigma

Published: May 20, 2010 by EETimes

Authors: John X. Wang

Developing "best-in-class" robust designs is crucial for creating competitive advantages. Customers want their products to be dependable--"plug-and-play." They also expect them to be reliable--"last a long time." Furthermore, customers are cost-sensible; they anticipate that products will be affordable. Becoming robust means seeking win–win solutions for productivity and quality improvement. So far, robust design has been a "road less traveled."

Achieving Robust Designs with Six Sigma: Dependable, Reliable, and Affordable

Published: Oct 06, 2005 by informIT

Authors: John X. Wang

Subjects:

Engineering - General

Developing "best-in-class" robust designs is crucial for creating competitive advantages. Customers want their products to be dependable—"plug-and-play." They also expect them to be reliable—"last a long time." Furthermore, customers are cost-sensible; they anticipate that products will be affordable. Becoming robust means seeking win–win solutions for productivity and quality improvement.

COMPLEXITY AS A MEASURE OF THE DIFFICULTY OF SYSTEM DIAGNOSIS

Published: Mar 01, 1996 by International Journal of General Systems

Authors: John X. Wang

Subjects:

Engineering - General

Complexity as a measure of the difficulty of diagnosis, or troubleshooting, of a system is explored in this paper. It is found in this paper that the system structure has an effect on system complexity as well as the number of components which make up the system. This complexity function presents an intrinsic feature of the system and can be used as a measure for system complexity, which is significant to reliability prediction and allocation.

General inspection strategy for fault diagnosis—minimizing the inspection costs

Published: Mar 01, 1995 by Reliability Engineering & System Safety

Authors: Rune Reinertsen, John X. Wang

Subjects:

Engineering - General

In this paper, a general inspection strategy for system fault diagnosis is presented. This general strategy provides the optimal inspection procedure when the inspections require unequal effort and the minimum cut set probabilities are unequal.

Time-dependent logic for goal-oriented dynamic-system analysis

Published: Jan 19, 1995 by Reliability and Maintainability Symposium, 1995. Proceedings., Annual

Authors: Marvin L. Roush, John X. Wang

Subjects:

Engineering - General

This paper highlights an error that can arise in analyzing accident scenarios when time dependence is ignored. A simple straight-forward solution is provided without the necessity of utilizing more powerful (and hence more complex) dynamic event tree techniques. This possible solution is a straightforward extension of the current fault-tree/event-tree approach by incorporating a time-dependent algebraic formalism into fault-tree/event-tree analysis.

Optimal inspection sequence in fault diagnosis

Published: Mar 01, 1992 by Reliability Engineering & System Safety

Authors: John X. Wang

Subjects:

Engineering - General

The average number of inspections in fault diagnosis to find the actual minimal cutsets (MCS) causing a system failure is found to be dependent on the inspection sequence adopted. An inspection on a component whose Fussell-Vesely importance is nearest to 0·5 leads to the discovery of the actual MCS by a minimum number of inspections.

Fault tree diagnosis based on shannon entropy

Published: Feb 01, 1992 by Reliability Engineering & System Safety

Authors: John X. Wang

A fault tree diagnosis methodology which can locate the actual MCS (minimum cut set) in the system in a minimum number of inspections is presented. The result reveals that, contrary to what is suggested by traditional diagnosis methodology based on probabilistic importance, inspection on a basic event whose Fussell-Vesely importance is nearest to 0·5 best distinguishes the MCSs.

A practical approach for phased mission analysis

Published: Apr 30, 1989 by Reliability Engineering & System Safety

Authors: Xue Dazhi, John X. Wang

This paper presents a new treatment of phased mission problems. Generalized intersection and union concept is developed and provides a pragmatic tool to guide a phased mission analysis. A practical approach to incorporate phased mission analysis into accident sequence quantification, which does not use the basic event transformation, is presented.

Photos

News

Using Arduino to create an isolated flyback converter with an open loop

By: John X. Wang

Subjects: Engineering - Electrical

The flyback converter is one type of switched-mode power supply (SMPS) topology. In switching power supply designs, the input voltage is rectified and filtered at the input rather than being stepped down first. After that, a chopper transforms the voltage into a high-frequency pulse train. The voltage is rectified and filtered once more before it reaches the output.

The flyback converter is an isolated power converter. The two prevailing control schemes are voltage mode control and current mode control. In the majority of cases, current mode control needs to be dominant for stability during operation. Both modes require a signal related to the output voltage.

There are two possible designs for the flyback converter:

Open-loop Flyback Converter: Unlike closed-loop flyback converters, which have a feedback circuit, open-loop flyback converters do not have feedback from output to input. Thus, an open-loop flyback converter's output is unregulated.

Closed-loop Flyback Converter: A closed-loop flyback converter has a feedback circuit from the output to the input. Thus, a closed-loop flyback converter's output is regulated.

Designing a flyback converter involves several design parameters, and it is critical to understand them. Every flyback converter can operate in either of two modes:

Continuous Conduction Mode (CCM): During the whole switching period, the inductor's current is continuous in CCM. As a result, a regulated voltage is obtained at the output; however, the output is only regulated when the current is drawn within CCM's bounds.

Discontinuous Conduction Mode (DCM): In this mode, the inductor's current pulses and briefly drops to zero throughout the switching process. Therefore, DCM does not receive a controlled voltage. Nonetheless, by joining an input to an output feedback circuit, the voltage can be controlled.

The following SMPS circuits are designed in this article:

1. Boost Converters:

a) open loop;

b) closed loop;

c) open loop with adjustable output;

d) closed loop with adjustable output.

2. Buck Converters:

a) Buck Converter with Open Loop;

b) Buck Converter with Closed Loop;

c) Buck Converter with Open Loop and Adjustable Output;

d) Buck Converter with Closed Loop and Adjustable Output.

3. Buck-Boost Converters:

a) Inverting Buck-Boost Converter with Open Loop;

b) Inverting Buck-Boost Converter with Open Loop and Adjustable Output.

4. Flyback Converter

5. Push-Pull Converter

Similar to a Buck-Boost DC-to-DC converter, a Flyback converter yields an output voltage that can be either higher or lower than the input supply voltage. It operates on the principle of switching regulators since it is one of the topologies of the Switched Mode Power Supply (SMPS).

Except for the flyback converter's isolated winding inductance, both models are comparable to the Buck-Boost converter. The Flyback converter's output voltage is non-inverting because of its separate winding. The result is a positive output voltage.

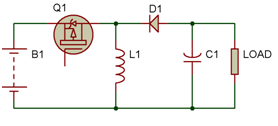

Figure 1: Typical Buck-Boost Converter Circuit Diagram

Figure 2: Flyback Converter Circuit Diagram with Isolated Winding Shown

Figure 3: Circuit Diagram Displaying Flyback Converter Diode Connection Change

Flyback converters use switching regulators as opposed to linear regulators. Unlike linear regulators, they do not dissipate power as heat to change the voltage, so these converter circuits follow the law of power conservation: in the ideal case, input power and output power are equal.

This article designs an isolated flyback converter, meaning that the ground planes of the input and output are separate.

The project's flyback converter converts a 12 V DC input to a 5 V DC output. After the circuit has been designed and assembled, a multimeter is used to measure its output voltage and current. These figures indicate the efficiency of the flyback converter designed in the project.

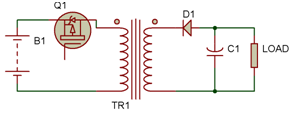

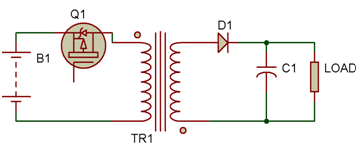

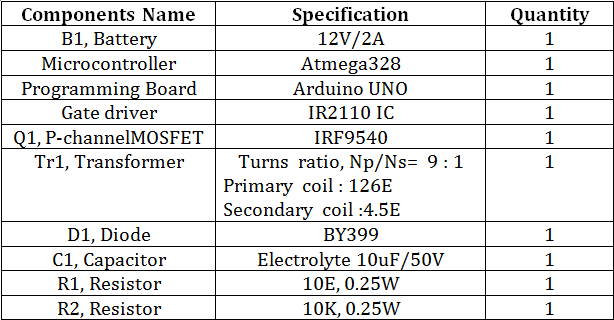

Figure 1. Component List Needed for Open Loop Isolated Flyback Converter

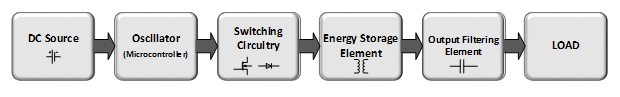

Block Diagram

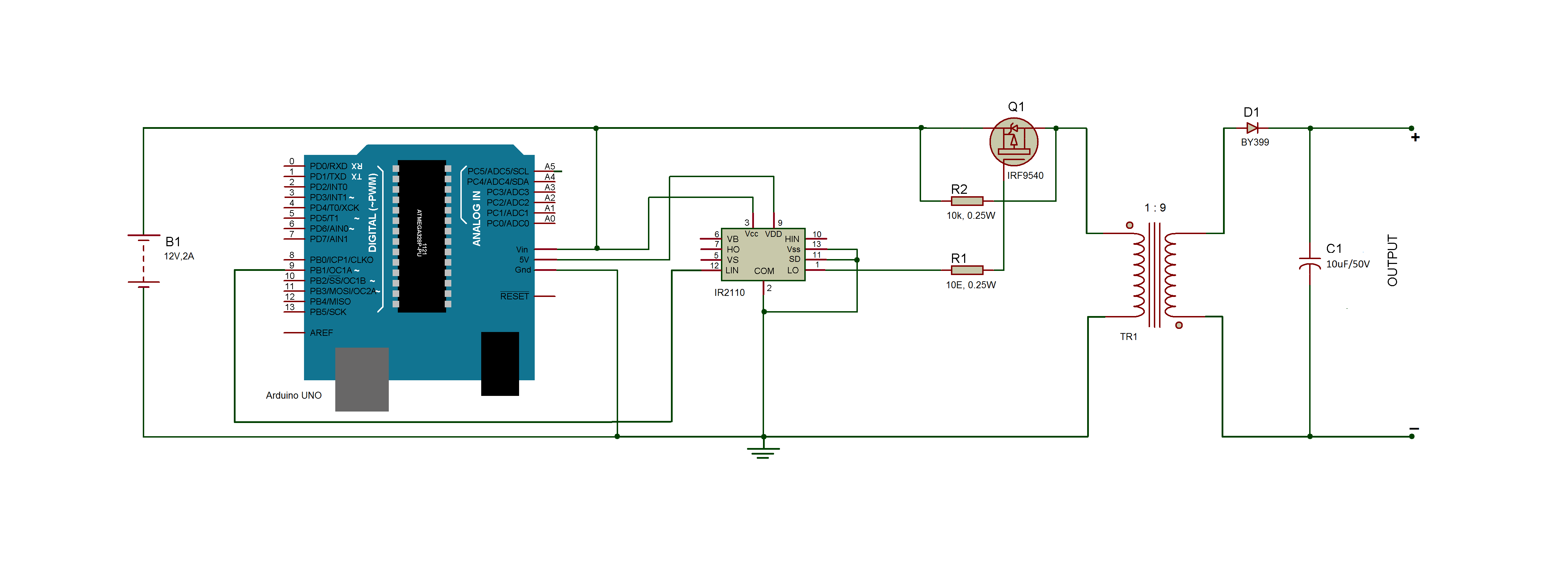

Figure 2: Open Loop Isolated Flyback Converter Block Diagram

Connections in Circuits

This assignment involves designing an open loop flyback converter that operates in CCM mode and calculating the component values for the desired output using the CCM standard equations.

Circuit blocks for the Flyback converter include the following:

1. DC source: The circuit's input power source is a 12V battery.

2. Oscillator and Switching Mechanism

The transistor and diode are the switching components utilized in switching.

The frequency at which the switching components must function is required. An oscillator circuit produces this frequency. The Arduino UNO is used in this project to generate a PWM signal that supplies the necessary frequency. You can also use any other Arduino board, such as the Arduino Mega. The circuit can be implemented with any microcontroller or microcontroller board that can output PWM. Since Arduino is the most widely used prototyping board and is simple to program, it was selected. It's simple to learn and operate with Arduino because of the strong community support.

A transistor and a diode are employed as the switching components. MOSFETs are the transistor of choice because FETs are known for fast switching and low RDS(ON) (drain-to-source resistance in the ON state). In this setup the MOSFET is connected in a high-side arrangement. An N-channel MOSFET on the high side needs a gate driver IC or bootstrap circuitry for triggering, which makes the driver more complex. The circuit therefore uses a P-channel MOSFET (shown as Q1 in the circuit diagram), which does not require a gate driver on the high side but has a higher RDS(ON) than an N-channel MOSFET, resulting in additional power loss.

The circuit's MOSFET has a threshold voltage of between 10 and 12 volts.

The MOSFET and diode switching times should be shorter than the PWM wave's rise and fall times. Both the MOSFET's RDS(ON) and the diode's forward voltage drop should be minimal. It is good practice to use a gate-to-source resistor to prevent the MOSFET from being unintentionally triggered by outside noise. By discharging the MOSFET's parasitic capacitance, it also helps turn the MOSFET off quickly. A low-value resistor (10 Ω to 500 Ω) can be placed at the MOSFET gate to limit inrush current and damp ringing (parasitic oscillations). The PWM signal's voltage level should exceed the MOSFET's threshold voltage so that the MOSFET turns on fully with the lowest RDS(ON).

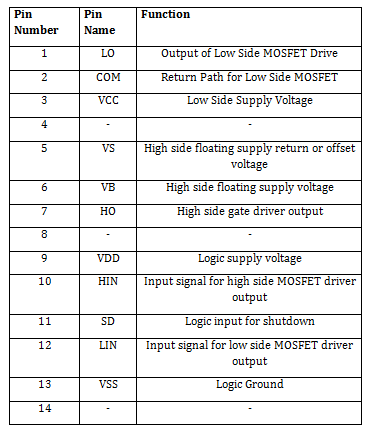

The microcontroller can only provide a 5V PWM signal, so it cannot drive the MOSFET directly. The design therefore uses an additional integrated circuit (IC), the IR2110, to produce a 12V PWM signal; the microcontroller supplies the IR2110's input. The IR2110 is a high-speed power MOSFET and IGBT driver with independent high- and low-side referenced output channels. The floating channel can operate at up to 500V or 600V. The IC is compatible with 3.3V logic, so it can be used with any microcontroller. It comes in a 14-lead PDIP package. The pin arrangement of the IR2110 is as follows.

Figure 3: Table listing the IR2110 IC pin arrangement

A diode is the other switching element in the circuit. The diode's switching time needs to be shorter than the PWM wave's rise and fall times; the Arduino board produces a PWM wave with a rise time of 110 ns and a fall time of 90 ns. The diode's forward voltage drop must also be very low, since power dissipated in the diode further reduces the circuit's efficiency. A BY399 diode is used for this experiment since it best fits these requirements.

The circuit's switching frequency must be chosen before producing the PWM signal. A switching frequency of 10 kHz was chosen for this flyback converter because any higher than this will cause the transformer in the circuit to become saturated.

Another crucial factor to take into account is the generated PWM signal's duty cycle, which determines the MOSFET's active state. This is how the duty cycle can be computed.

$$V_{out} = V_{in} \cdot \frac{D}{N\,(1 - D)}$$

where

Vin = input voltage = 12V,

Vout = desired output voltage = 5V,

Np = number of primary turns in the transformer,

Ns = number of secondary turns in the transformer,

N = turns ratio = Np/Ns = 9/1,

D = duty cycle.

When all the values are entered, D ≈ 0.8, or 80%.
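The duty-cycle relation above can be rearranged to D = N·Vout / (Vin + N·Vout). The following is a minimal host-side C++ sketch of that rearrangement; the function name `flybackDutyCycle` is illustrative and does not come from the article.

```cpp
#include <cassert>

// Solve Vout = Vin * D / (N * (1 - D)) for the duty cycle D,
// where turnsRatio is N = Np/Ns.
// Hypothetical helper for illustration only.
double flybackDutyCycle(double vin, double vout, double turnsRatio) {
    assert(vin > 0.0 && vout > 0.0 && turnsRatio > 0.0);
    const double reflected = vout * turnsRatio;  // N * Vout
    return reflected / (vin + reflected);        // D = N*Vout / (Vin + N*Vout)
}
```

With the article's values (Vin = 12 V, Vout = 5 V, N = 9) this gives 45/57, roughly 0.79, which the article rounds to 80%.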

The Arduino board is programmed to produce a 10 kHz PWM signal with an 80% duty cycle. The article includes the Arduino sketch needed to produce the appropriate PWM output. To use it, download it and upload it to an Arduino board.

Switching losses in the switching components increase with switching frequency, which lowers the SMPS's efficiency. A high switching frequency, however, improves the output's transient response and reduces the size of the energy storage element.

3. Energy Storage Element

To store energy as a magnetic field with Np/Ns = 9/1, a transformer is utilized. The transformer functions as an Energy Storage Element as a result. To choose the right transformer, one must compute the peak current in the primary. This is how it can be calculated.

$$I_{peak} = \frac{4\, I_{o(max)}}{3\, N\, (1 - D)}$$

where Io(max) is the maximum output current.

Taking Io(max) = 50mA and entering all values yields Ipeak ≈ 36mA.
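As a quick check of the peak-current formula, here is a small C++ sketch under the article's stated values. The function name `flybackPeakPrimaryCurrent` is an assumption made for illustration.

```cpp
#include <cassert>

// Ipeak = 4 * Io(max) / (3 * N * (1 - D)), as given in the text.
// ioMaxAmps: maximum output current in amperes
// turnsRatio: N = Np/Ns
// duty: duty cycle D, strictly between 0 and 1
// Hypothetical helper for illustration only.
double flybackPeakPrimaryCurrent(double ioMaxAmps, double turnsRatio, double duty) {
    assert(ioMaxAmps >= 0.0 && turnsRatio > 0.0);
    assert(duty > 0.0 && duty < 1.0);
    return 4.0 * ioMaxAmps / (3.0 * turnsRatio * (1.0 - duty));
}
```

With Io(max) = 50 mA, N = 9 and the rounded D = 0.8, the result is about 37 mA; with the unrounded duty cycle of about 0.79 it is about 35 mA. The article's approximate figure of 36 mA sits between the two.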

The circuit uses a transformer with Np/Ns = 9/1 and a primary current rating higher than the peak current calculated above, which is needed to deliver the desired output current. The transformer must also be able to operate at the chosen switching frequency.

4. Output Filtering Element

A capacitor, denoted C1 in the circuit diagram, is used as a filtering element at the circuit's output. Transistor Q1 in a flyback converter circuit normally operates by turning ON and OFF at the oscillator circuit's frequency. This causes a train of pulses to be produced at transistor Q1, capacitor C1, and inductor L1. Both the positive and negative cycles of the PWM signal have an inductor connected to the capacitor. In doing so, an LC filter is created, which filters the pulse train to provide a smooth DC at the output.

The following formula for CCM can be used to determine the capacitor's value:

$$C_{min} \geq \frac{I_{rms}}{8\, F_s\, \Delta V_o}$$

where

Cmin = minimum capacitance,

Fs = switching frequency = 10 kHz,

ΔVo = voltage ripple at the output, assumed to be 100 mV,

Irms = RMS current:

$$I_{rms} = \sqrt{D \cdot I_{peak}^2} = \sqrt{0.8 \times (0.036)^2} \approx 32\ \text{mA}$$

Putting all the values together, Cmin >= 4 µF.

Since about 4 µF is the minimum capacitance required, a widely available standard-value 10 µF capacitor is used in the circuit.
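The RMS-current and minimum-capacitance calculation can be reproduced with a short C++ sketch. The function names `rmsCurrent` and `minOutputCapacitance` are illustrative assumptions; the formulas are the ones stated in the text.

```cpp
#include <cassert>
#include <cmath>

// Irms = sqrt(D * Ipeak^2), as given in the text.
// Hypothetical helper for illustration only.
double rmsCurrent(double duty, double iPeakAmps) {
    assert(duty >= 0.0 && duty <= 1.0);
    return std::sqrt(duty * iPeakAmps * iPeakAmps);
}

// Cmin = Irms / (8 * Fs * ripple), result in farads.
// fsHz: switching frequency in hertz
// rippleVolts: allowed output voltage ripple in volts
// Hypothetical helper for illustration only.
double minOutputCapacitance(double iRmsAmps, double fsHz, double rippleVolts) {
    assert(fsHz > 0.0 && rippleVolts > 0.0);
    return iRmsAmps / (8.0 * fsHz * rippleVolts);
}
```

For D = 0.8 and Ipeak = 36 mA, `rmsCurrent` gives about 32 mA, and with Fs = 10 kHz and 100 mV of ripple `minOutputCapacitance` gives about 4 µF, matching the figures in the text.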

The capacitor used should not be smaller than the calculated value, so that the output has the desired voltage and current. The capacitor's voltage rating must be greater than the output voltage; otherwise the excess voltage across its plates will cause it to leak current and eventually rupture. Discharge all capacitors before working on any DC power supply. To do this, short the capacitors with a screwdriver while wearing insulated gloves.

The Circuit's Operation

A switched-mode power supply (SMPS) consists of switching components that operate at a high frequency and storage components that store electrical energy while the switching components conduct and release it to the output while they do not conduct.

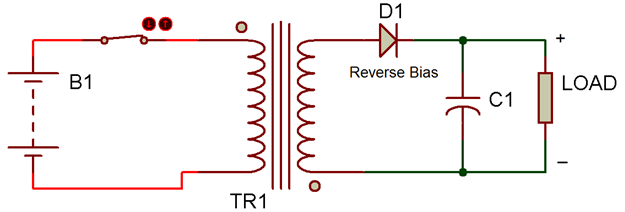

A transformer (TR1), a capacitor (C1), a transistor (which functions as a switch), and a diode (D1), which functions as a second switch, make up the Fly-Back converter. The primary coil of the transformer is directly linked to the input source when the switch is closed, at which point it begins to accumulate energy. As a result, the transformer's secondary experiences a voltage with the opposite polarity.

Figure 4: Circuit Diagram illustrating the Flyback Converter's switching component in the ON state

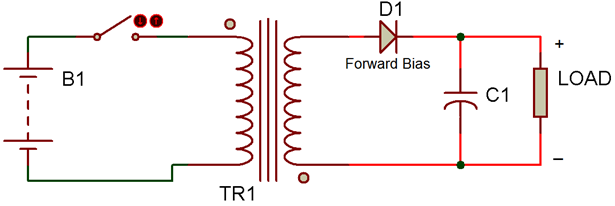

When the switch is open, the source is disconnected from the circuit and the primary winding's current falls to zero. The energy stored in the magnetic core is then released through the transformer's secondary winding. As a result, the diode becomes forward-biased, and the secondary winding supplies current to the load via diode D1. The output voltage begins to decrease as the transformer's stored energy decreases. At this point, however, the capacitor takes on the role of a current source and continues to supply the load with current until the next cycle, that is, until the ON state starts.

Figure 5: Circuit Diagram illustrating the Flyback Converter's switching component in the OFF state

The duty cycle (D) and transformer turn ratio in this particular Fly-Back Converter arrangement determine whether the converter steps up or down the input voltage.

Examining the Circuit

This flyback converter converts a 12 V DC input to a 5 V DC output.

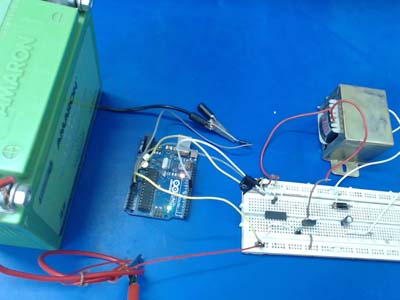

Figure 6: A breadboard-designed prototype of an open-loop flyback converter

The input voltage in this circuit is Vin = 12V.

Battery Voltage, Vin = 11.8V, practically

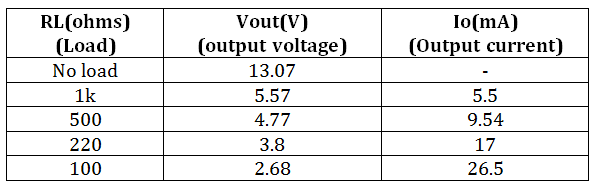

The following observations were made when measuring voltage and current values at the output with various loads.

Figure 7: Table displaying the Open Loop Flyback Converter's output voltage and current for various loads

Thus, it can be seen that with a tolerance limit of +/-0.5V, a current of 9.54 mA can be pulled at 4.77 V output.

The following formula can be used to determine the circuit's power efficiency at a maximum output current of 9.54 mA for a 5V output:

$$\text{Efficiency}\,\% = \frac{P_{out}}{P_{in}} \times 100$$

Pout (output power) = Vout × Iout

Pin (input power) = Vin × Iin

Vout (output voltage) = 4.77 V

Iout (output current) = 9.54 mA

Pout ≈ 46 mW

Vin (input voltage) = 11.8 V

Iin (input current) = 6.3 mA (ammeter reading)

Pin ≈ 74 mW

Putting all the values together, Efficiency % ≈ 62%.
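The efficiency calculation can be repeated from the measured values with a few lines of C++. This is a sketch only; the function name `efficiencyPercent` is an assumption, not part of the article's code.

```cpp
#include <cassert>

// Efficiency % = (Pout / Pin) * 100, with Pout = Vout * Iout and Pin = Vin * Iin.
// Voltages in volts, currents in amperes.
// Hypothetical helper for illustration only.
double efficiencyPercent(double vin, double iin, double vout, double iout) {
    const double pin = vin * iin;    // input power in watts
    const double pout = vout * iout; // output power in watts
    assert(pin > 0.0);
    return 100.0 * pout / pin;
}
```

With Vin = 11.8 V, Iin = 6.3 mA, Vout = 4.77 V and Iout = 9.54 mA, the unrounded powers give a little over 61%. Rounding the powers to 46 mW and 74 mW first, as the text does, gives about 62%.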

This circuit has some restrictions. This circuit's output voltage fluctuates depending on the load resistance; it is not controlled. By including feedback circuitry, which aids in controlling the output voltage, this can be made better. There are losses in the windings surrounding the core, eddy current and hysteresis losses in the inductor, switching, and conduction losses of the MOSFET and diode, losses in the capacitor because of the equivalent series resistance, and losses because of the high Rds(on) of the P-MOS.

This flyback converter operates in CCM and has an open-loop, isolated output. It can be used to power portable self-powered gadgets or drive LEDs as a low-loss current source.

Project Source Code

###

//Program to

/*
Code for Flyback with Input voltage = 12V and Output voltage = 5V
This code will generate a PWM (Pulse Width Modulation) signal of 10kHz with 20% duty cycle
but the desired duty cycle is 80%.
As in my experiment, I am using a P-channel MOSFET, PMOS is triggered by negative voltage.
So we need to set the duty cycle of the PWM signal to 20% so that the duty cycle of the MOSFET can be 80%
*/

#define TOP 1599 // Fosc = Fclk/(N*(1+TOP)), Fosc = 10kHz, Fclk = 16MHz
#define DUTY_CYCLE 320 // OCR1A value for 20% duty cycle
#define PWM 9 // PWM (Pulse Width Modulation) wave at pin 9

void setup() {
  // put your setup code here, to run once:
  pinMode(PWM, OUTPUT); // set pin 9 as output
  TCCR1A = 0; // reset the register
  TCCR1B = 0; // reset the register
  TCNT1 = 0; // reset the register
  TCCR1A |= (1<<COM1A1); // set output at non-inverting mode
  TCCR1A |= (1<<WGM11); // selecting fast PWM mode
  ICR1 = TOP; // setting frequency to 10kHz
  TCCR1B |= (1<<CS10)|(1<<WGM12)|(1<<WGM13); // timer starts
  OCR1A = DUTY_CYCLE; // 20% duty cycle
  delay(1000); // delay for compensating the hardware transient time with software
}

void loop() {
  // put your main code here, to run repeatedly:
}

###

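The constants TOP = 1599 and DUTY_CYCLE = 320 in the sketch follow from the formula in its comment, Fosc = Fclk/(N*(1+TOP)), where N is the timer prescaler. The following host-side C++ sketch shows how such values could be derived for other frequencies or duty cycles. It is plain arithmetic and does not touch any timer registers; the function names `timer1Top` and `timer1Compare` are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// TOP value for a requested PWM frequency, from
// fPwm = fClk / (prescaler * (1 + TOP)).
// Hypothetical helper for illustration only.
uint16_t timer1Top(uint32_t fClkHz, uint32_t prescaler, uint32_t fPwmHz) {
    assert(prescaler > 0 && fPwmHz > 0);
    const uint32_t counts = fClkHz / (prescaler * fPwmHz); // timer ticks per PWM period
    assert(counts >= 1 && counts <= 65536);                // must fit a 16-bit timer
    return static_cast<uint16_t>(counts - 1);
}

// Compare value for a duty cycle given in percent, using the same
// convention as the sketch: compare = percent * (TOP + 1) / 100.
// Hypothetical helper for illustration only.
uint16_t timer1Compare(uint16_t top, uint32_t dutyPercent) {
    assert(dutyPercent < 100);
    const uint32_t period = static_cast<uint32_t>(top) + 1u;
    return static_cast<uint16_t>(period * dutyPercent / 100u);
}
```

For a 16 MHz clock, a prescaler of 1 and a 10 kHz target, `timer1Top` returns 1599, and `timer1Compare(1599, 20)` returns 320, the two constants used in the sketch.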

Schematics for Circuits

Dr. John Wang's work on Functional Safety and SOTIF of Autonomous Vehicles and Autonomous Driving

By: John X. Wang

Subjects: Biomedical Science, Business & Management, Computer Game Development, Engineering - Electrical, Engineering - General, Engineering - Industrial & Manufacturing

Functional Safety Assessment (FSA)

Functional Safety Assessment (FSA) according to ISO 26262. Part 2 – Clause 6.12

Eight Key ISO-26262 Templates for Compliance

What Every Engineer Should Know About Formal Contract Logic for ISO 26262 Compliance

What Every Engineer Should Know About Defeasible Logic for ISO 26262’s Compliance Checking

ISO 26262 Part 6 Software Safety

ISO 26262 Part 6 Section 5 Product Development At The Software Level

ISO 26262 Part 6 Section 8 Software Unit Design And Implementation

ISO 26262 Part 6 Section 9 Software unit verification

ISO 26262 Part 10 Software integration and verification

Which strategy results in error-free automotive software?

Software FMEA: a tool for Industrial Design Engineering’s Inventive Problem Solving

Software FMEA: a tool for Industrial Design Engineering’s Inventive Problem Solving

Back-to-Back Testing based on ISO 26262

Best Practices for Regression Test based on ISO 26262 and ASPICE (Automotive SPICE)

Four questions to be answered to determine methods for Unit Testing based on ISO 26262

Nine questions to be answered to establish test cases for unit testing based on ISO 26262

Development of software integration testing for electric power converters based on ISO 26262

How to Conduct System Level Integration Testing in the Automotive Industry (ISO 26262)

How to Conduct Automotive Integration Testing (ISO 26262) at the Subsystem Level?

Item Integration Testing based on ISO 26262

A Tribute to Dr. Nikola Tesla: Best Inventive Problem Solving (IPS) Practices for Embedded Software Testing

How Does Tessy Tool Automate Automotive Software Integration of an Electric Vehicle on the Big Island?

ISO 26262 Product development: Work Products

ISO 26262 Part 4 Product development at the system level: Work Products

ISO 26262 Part 5 Product development at the hardware level: Work Products

ISO 26262 Part 6 Product development at the software level: Work Products

ISO 26262 Part 8 Supporting

ISO 26262 Part 8 Supporting processes Qualification of software components: work products

ISO 26262 Part 8 Supporting processes Clause 11 Confidence in the use of software tools: work products

ISO 26262 Part 8 Supporting processes Evaluation of hardware elements: work products

ISO 26262 Part 6 Annex B (informative) Model-based development approaches

Model-Based Design of Safety-Related Software based on ISO 26262

Developing Safety-Related Software Using a Model-Based Reference Workflow

10 Steps to develop Model-based Software that Complies with ISO26262

Model Based Software-in-the-Loop Testing of Closed-Loop Automotive Software

ISO 26262 Part 8 Software Tool Qualification

Software Tool Qualification in ISO 26262 Based Software Development

Annex D (informative) Freedom from interference between software elements

Annex E (informative) Application of safety analyses and analyses of dependent failures at the software architectural level

ISO 26262 Part 9 Dependent Failure Analysis (DFA)

Freedom from Interference Analysis for Automotive Operating System

Autonomous Driving Computing Architecture

Functional Safety of Autonomous Travel: Seeing the Road Through the LIDAR Lens

Radar and Functional Safety technology for Advanced Driving Assistance Systems (ADAS)

Inventive Problem Solving: decouple software and hardware development of autonomous driving

Will Autonomous Cars Look Like Unmanned Aerial Vehicles (UAVs)?

Dynamical Movement Primitives (DMPs) for Safety Engineering

Multi-Core Processing (MCP)

Functional Safety of Multi-Core Processing

Multi-Core Processor Functional Safety Considerations for Autonomous Vehicles

ISO 26262 Safety Architecture

New Hall Sensor Architecture Boasts Functional Safety

An effective functional safety infrastructure for fault-tolerant quantum computing

ISO 26262 – The Second Edition

Industrial Design Engineering: Inventive Problem Solving for ISO 26262 – The Second Edition

Happy New Year! What Every Engineer Should Know about ISO 26262:2018

Defining Safety Requirements During New Product Development (NPD)

What Risks might be involved on the road of being Hamlet’s ally?

What Every Engineer Should Know About Fail Silent

Functional safety: selected standards, architectures, and analysis

Functional Safety for in Green Electronics Manufacturing

Integrated Platform for Autonomous Vehicle Defined by Software and Its Functional Safety

Utilizing Recurrent Neural Networks (RNNs) and Bayesian networks to assess driver fatigue in autonomous driving

Machine Learning's Application In Autonomous Vehicles

Developing safety-critical ASICs for ADAS and similar automotive systems

Driver monitoring technologies

Human Errors and Autonomous Driving

Developing a Clear Interface for Control Transfer in a Level 2 Automated Driving System

Scenic Drive of Kohala Mountain Road with a Self-Driving Car on the Big Island

Scenic Drive with a Self-Driving Car: Old Mamalahoa Highway, Big Island

Scenic Drive with a Self-Driving Car: Highways 11 and 19, Big Island

Scenic Drive with a Self-Driving Car: Mauna Lani Drive, Big Island

Scenic Drive with a Self-Driving Car (Big Island): Sunsets and stargazing

Scenic Drive with a Self-Driving Car (Big Island): Hike to hidden waterfalls

Scenic Drive with a Self-Driving Car (Big Island): Swim with gentle giants

Happy 4th of July: Scenic Drive of Lava Fields with a Self-Driving Car (Big Island)

Scenic Drive of Big Island Circle Route – Hawaii

Scenic Drive of Kona and the Coffee Coast with a Self-Driving Car – Big Island

Scenic Drive of Volcanoes National Park with a Self-Driving Car – Big Island

Pepe’ekeo Scenic Drive with a Self-Driving Car – Big Island

Pololu Valley Lookout Scenic Drive with a Self-Driving Car – Big Island

Big Island: Coffee Country (South Kona) Scenic Drive with a self-driving car

Big Island: Kapoho Kalapana Road Scenic Drive with a self-driving car

What Every Engineer Should Know About UL 4600 “Standard for Safety for the Evaluation of Autonomous Products”

Design Constraints for an autonomous driving system

A Tale of Autonomous Driving towards Functional Safety

Industrial Design Engineering Project: DIY an autonomous spraying system

Year 2020, shall we start to see AI-based Autonomous Vehicles on the road?

Emerging technique to engineer a safe and robust Autonomous Vehicle

Year 2019: moving towards achieving Autonomous Vehicle’s Functional Safety

Year 2019, the Robots Are Coming with Fault Tolerant System Architecture

Functional Safety: Risk Mitigation of Deep Neural Network for Autonomous Driving

Design for Diagnosability

ISO 21448 SOTIF (Safety Of The Intended Functionality)

What Every Engineer Should Know About Designing AI enabled System with SOTIF (Safety Of The Intended Functionality)

What Every Engineer Should Know About SOTIF: fine tuning highly automated vehicle and automated vehicle safety

What Every Engineer Should Know About Safety Of The Intended Functionality (SOTIF) and ISO/PAS 21448

What Every Engineer Should Know About Safety Of The Intended Functionality (SOTIF)

Robust Designs, Inventive Problem Solving, and Safety of the Intended Functionality (SOTIF)

Risk Engineering: Comparing Health Systems Part 2

By: John X. Wang

Subjects: Biomedical Science, Business & Management

Since this statement contains a composite set of aims, every component of this statement of purposes or goals is pertinent to our study. And we can envision how a healthcare system could accomplish this wide range of objectives.

What objectives make up the composite set? The first, and the most flexible, is promoting better health. In this context, enhancing health includes enhancing the well-being of individual persons, which is how we typically view health care. However, it also has to do with enhancing public health.

Thus, we will examine healthcare systems in terms of their ability to enhance public and individual health. Enhancing health equity is a goal that is part of the larger objective of increasing population health. This entails evaluating health systems' ability to guarantee that every segment of the population can obtain healthcare. It also entails asking whether health systems respond to disadvantages resulting from socioeconomic status, race, gender, or class when granting access to healthcare. The significance of this will become clear later in the lesson, when we examine healthcare systems in light of their inputs. These are the inputs required by a healthcare system to generate a specific set of results.

One of the results we'll look at while examining the relationships between inputs in health systems is how responsive the system is to the populations it serves. That is, whether or not each health care system produces equitable results.

This entails determining whether health systems are adaptable to the requirements of communities in specific locations or about certain ailments or disorders. The ability of a healthcare system to adapt to the demands of the populace will directly affect whether or not the goal of enhancing population health is met.

Financial fairness requires a complete shift in perspective, because the issues at hand go beyond merely enhancing health and health equity or determining how responsive the healthcare system is. In this context, financial fairness refers to lowering the amount of money that each patient must pay out of pocket to receive healthcare services. This is significant because a system based upon out-of-pocket payments all but ensures that the bulk of the population will not have appropriate or equal access to health care, as we shall see later when we examine the Chinese health system.

Ensuring that the general public has access to high-quality healthcare services when and where they are needed is the goal of access to healthcare.

A further way to improve access to healthcare is to guarantee a decrease in the amount of money that patients must pay out of pocket.

Optimizing the healthcare system's resource utilization is equally crucial. Here we are talking about the quality of care: whether or not the population obtains the care that is recommended, that is, whether or not specific groups receive effective care. It also entails determining whether specific populations receive needless care, which could raise the risk of injury and would undoubtedly raise healthcare costs.

Risk Engineering: Comparing Health Systems Part 1

By: John X. Wang

Subjects: Business & Management

We examined what we meant by comparative analysis early in the series. Here, I want to pay close attention to what we mean when we talk about health systems in this section of the course. The intrinsic complexity of health systems makes this imperative. Any system, even a microsystem, is made up of a very intricate web of connections between employees, supervisors, suppliers, and patients. At the macro system level, there is an enormous amount of complexity. With an endless list of those interactions, it is possible to engage with them in ways that are neither really beneficial nor helpful. This is especially important to keep in mind when conducting comparative analysis.

A systems analysis does, however, give us a focal point for conducting a comparison study. A system is defined as a collection of components that are logically arranged and linked together in a pattern or structure to produce traits and behaviors that are frequently categorized as part of the system's function or purpose.

Now, this may seem like a fairly simplistic definition. It merely describes a collection of components and how they interact. However, because it outlines the connections between the components and their intended functions, it is incredibly helpful. Therefore, when comparing health systems, we will be evaluating the ways in which those systems fulfill their objectives.

What the health care system is and why it exists is one of the questions we might ask ourselves. All institutions, individuals, and behaviors whose main goal is to advance or restore health are considered to be part of the healthcare system. Since the health system is defined in relation to health, this description once more seems circular.

However, a closer examination of what we mean when we talk about health promotion reveals several significant objectives. The World Health Organization defines the objectives of a healthcare system as enhancing health and health equity in ways that are responsive to needs, financially fair, and make the best or most efficient use of available resources.

Since this statement contains a composite set of aims, every component of this statement of purposes or goals is pertinent to our study. And we can envision how a healthcare system could accomplish this wide range of objectives.

Risk Engineering of Healthcare Systems: Leadership and Governance in the United States

By: John X. Wang

Subjects: Biomedical Science

In the remaining portion of this series of articles, we will go over each of the components that make up the US healthcare system and how they work together to achieve outcomes, especially population health outcomes. The World Health Organization framework is composed of six main building blocks. These include

- workforce,

- information,

- finance,

- pharmaceuticals and medical products,

- leadership and governance, and

- service delivery.

Here we will go over the building block of leadership and governance.

The most intricate yet vital component of any health system is undoubtedly its leadership and governance, often known as stewardship. It concerns the government's role in health and how it relates to other initiatives that affect health. Protecting the public interest entails managing and directing the entire healthcare system, both public and private.

The most important aspect of this description is the mention of the government directing the entire system, both public and private. And it is in this regard that one will observe certain differences in the US healthcare system.

The main way that the federal, state, and local governments in the US have an impact on the healthcare system is by creating arrangements to support the insurance system, which gives different subpopulations in the country access to healthcare. Medicaid serves the underprivileged and impoverished, whereas Medicare is for the elderly. There is also employer-sponsored health insurance, as well as a direct private insurance system for people without access to employer-sponsored coverage.

Furthermore, specific healthcare systems are maintained by the federal government for specific communities, specifically Native Americans and veterans.

- Medicare, for the elderly, provides coverage to almost 42 million people.

- Medicaid serves about 65 million low-income and disadvantaged individuals.

- Employer-provided health insurance, which covers about 156 million individuals, or roughly 49% of the population, is the biggest source of health insurance.

- There are 27 million uninsured people who are not represented.

The relationship between the US insurance system and the US healthcare delivery system determines the role that the US government plays in directing the US health system and how difficult it is to comprehend that involvement.

Physicians are essential to the provision of healthcare access. This pertains not only to primary care access; doctors also facilitate access to hospitals and outpatient care. The United States has a vast hospital system. Most hospitals are not-for-profit, although some are for-profit and some are public. Additionally, there is a substantial network of outpatient doctors.

To provide patients with healthcare, physicians, hospitals, and other healthcare providers establish intricate networks with different insurance companies. Furthermore, the relationship between the insurance and healthcare providers is what adds complexity to the healthcare system. The government plays two main roles in this.

First, it employs a financial insurance system to steer the delivery of healthcare, thereby raising the standard of care and, for some subpopulations, improving quality results.

Second, and probably most crucially, the government employs regulation to make sure that patients, payers, and healthcare providers interact within a framework of competitive markets. This is the most significant feature of the US healthcare system: the regulation of relationships among insurers, providers, and patients is contingent upon competition.

The US legal system shares a characteristic with the loosely connected, polycentric architecture of the US health care system. In his book Adversarial Legalism, Professor Robert Kagan describes the legal system of the United States in those terms. For example, the implementation of the Affordable Care Act was significantly impacted by legal challenges to its constitutionality.

The second characteristic of American law and government is that it depends on less closely linked decision-making processes and is more politically dispersed.

This refers to interactions among federal, state, and municipal governments as well as between the federal government and stakeholders. Thus, there is a tight relationship between a disjointed healthcare system with loosely connected pieces on the one hand, and a judicial system with fragmented authority and weak hierarchical authority on the other. The significance of this difference will become evident in later articles when we examine the healthcare systems in Germany and England. In such nations, the government can rely on hierarchical legal frameworks to direct the operations of the entire health system.

What therefore can we say about the functions of all the leadership and governance pillars in the US healthcare system?

Forty-five percent of the money spent on the health care system comes from the federal and state governments. This is important to note: no government in the United States is in charge of the system's overall design or its overarching direction. Governments are focused on enhancing the quality and accessibility of healthcare for certain subpopulations, not the general populace.

We'll go over each of the other WHO building blocks in the remaining portion of this series of articles. We will also examine how those components interact to link the healthcare system and population health outcomes.

Risk Engineering of Healthcare Systems: Health Spending vs. Social Spending

By: John X. Wang

Subjects: Biomedical Science

There have been two extremely significant statements about US priorities.

- Firstly, the relationship between health spending and social spending is exactly the opposite in France and Sweden, which have higher levels of social spending than the US.

- In each of those nations, the percentage of spending on social programs is far larger than the percentage on health care. What does it indicate to us? It indicates that efforts to redistribute wealth and resources to the underprivileged in order to improve population health outcomes overall are linked to high levels of social spending in those nations. These nations also often have lower rates of wealth inequality and allocate resources through social and health spending to improve population health.

- The objective of incorporating health spending into general social spending patterns is also reflected in the allocation's spending. What does that signify then? It implies that poverty in all areas is experienced in a multifaceted manner and that any attempt to reduce it requires a multifaceted set of solutions.

- For instance, in nations with universal health care, addressing a problem like domestic abuse would essentially include offering wraparound services. In other words, while domestic violence results in a need for healthcare assistance, it also creates a need for housing, legal counsel, and financial support.

- The intention is to include health services in the list of resources that a woman and her family require at that specific time to address the problem of domestic abuse.

- Secondly, on a larger scale, the issue may be as straightforward as figuring out how to combine the health system with housing, income, and support services so that they function together rather than separately.

Here are the questions:

- What then do we know about those two topics in terms of the United States?

- What do the available data reveal about US priorities concerning resource redistribution and the integration of the healthcare system into larger social support networks?

The following points are worth noting:

- Firstly, the United States is one of the nations with relatively high levels of social spending. Nevertheless, social spending in the US is not devoted to equitable resource redistribution. A larger percentage of social and health spending in the United States is entitlement-skewed, meaning that it is less concerned with redistributing resources to those in need and less focused on redistributing resources to enhance the general health of the populace.

- Secondly, there is a marked lack of commitment to integrating the health system with social support services, which is a manifestation of the same lack of commitment to reallocating resources to promote population health. So it is quite difficult to imagine, in the United States, a state- or federal-level wraparound system of assistance for victims of domestic abuse that incorporates all the dispersed, fragmented resources.

In contrast, the health system in the United States typically serves as a means of resolving concerns related to poverty. Thus, part of the creative work being done to reduce poverty in the US through Medicaid focuses on making sure that those with medical requirements also have their housing needs satisfied, or that those who are food insecure and in need of healthcare also have their nutritional needs satisfied.

That's crucial because it affects the people who receive those services, but it also shows that there is a health system in place that provides funds for the advancement of health. However, it provides financing for enhancing the health of certain groups of people as well as for individuals, not the general public. Thus, the country's strength in leveraging the health system to reduce poverty is counterbalanced by its weakness, which is the challenge it faces in combining social service and health spending in a way that improves population health outcomes.

Summary

We began our discussion of the healthcare system in the United States with a section of the book The American Health Care Paradox by Elizabeth Bradley and Lauren Taylor, which developed the idea that the nations with the worst population health outcomes would also be those that spend as much or more on healthcare than on social services. In other words, population health outcomes are poorer despite high health spending.

Thus, this ongoing discussion reflects priorities regarding the general redistribution of resources aimed at enhancing population health, as well as priorities regarding the challenges associated with integrating health and social systems.

Dr. John X. Wang's book: Top New Release in Industrial Engineering

By: John X. Wang

Subjects: Business & Management, Computer Game Development, Computer Science & Engineering, Disaster Planning & Recovery, Emergency Response, Energy & Clean Technology, Engineering - Chemical, Engineering - Civil, Engineering - Electrical, Engineering - Environmental, Engineering - General, Engineering - Industrial & Manufacturing, Engineering - Mechanical, Engineering - Mining, Ergonomics & Human Factors, Healthcare, Homeland Security, Information Technology, Life Science, Materials Science, Mathematics, Nanoscience & Technology, Occupational Health & Safety, Physics, Statistics

Amazon.com New Releases: The best-selling new & future releases in Industrial Engineering

Completely updated, this new edition uniquely explains how to assess and handle technical risk, schedule risk, and cost risk efficiently and effectively for complex systems that include Artificial Intelligence, Machine Learning, and Deep Learning. It enables engineering professionals to anticipate failures and highlight opportunities to turn failure into success through the systematic application of Risk Engineering. What Every Engineer Should Know About Risk Engineering and Management, Second Edition discusses Risk Engineering and how to deal with System Complexity and Engineering Dynamics, as it highlights how AI can present new and unique ways that failures can take place. The new edition extends the term "Risk Engineering" introduced by the first edition, to Complex Systems in the new edition. The book also relates Decision Tree which was explored in the first edition to Fault Diagnosis in the new edition and introduces new chapters on System Complexity, AI, and Causal Risk Assessment along with other chapter updates to make the book current.

Features

* Discusses Risk Engineering and how to deal with System Complexity and Engineering Dynamics

* Highlights how AI can present new and unique ways of failure that need to be addressed

* Extends the term "Risk Engineering" introduced by the first edition to Complex Systems in this new edition

* Relates Decision Tree which was explored in the first edition to Fault Diagnosis in the new edition

* Includes new chapters on System Complexity, AI, and Causal Risk Assessment along with other chapters being updated to make the book more current

The book is intended for beginners with no background in Risk Engineering and can be used by new practitioners, undergraduates, and first-year graduate students.

How to prevent wildfires like the one in Maui (Hawaii): "need Prognostic Models for Prediction," Dr. John Wang said

By: John X. Wang

Subjects: Disaster Planning & Recovery

A security camera at the Maui Bird Conservation Center captured a brilliant flash in the woods around 10:47 p.m. last Monday, illuminating the trees swinging in the wind. "I believe that is when a tree falls on a power line," Jennifer Pribble, a senior research coordinator at the institution, says in an Instagram video.

Although no single cause has been identified, experts believe that energized power lines toppled by severe winds may have caused a wildfire that eventually devastated Lahaina. On August 8, brush fires were already raging on Maui and the island of Hawaii.

Maui County officials assured residents that morning that a small brush fire in Lahaina had been extinguished. Still, they later issued an advisory describing "an afternoon flare-up" that necessitated evacuations.

The fires on the islands were fueled by a mix of low humidity and strong mountain winds caused by Hurricane Dora, a Category 4 storm in the Pacific Ocean hundreds of miles to the south.

Drought conditions have likely worsened in recent weeks as well. According to the U.S. Drought Monitor, about 16% of Maui County was under extreme drought a week ago.

Power equipment that could not withstand heavy winds, and power lines that were kept electrified despite warnings of high winds, are major factors behind the wildfire.

The footage adds to evidence that equipment belonging to the state's primary utility started many fires last week, when high winds blasted through drought-stricken grasslands, as had been forecast for days. While the still-burning Makawao fire had no connection with the inferno that raged into Lahaina, it was one of numerous fires that broke out on August 7 and 8.

At least one of those flared up, contributing to the raging fire that engulfed Lahaina, overpowering residents, tourists, and firefighters. As of Tuesday (8/15/2023), 99 people had been confirmed dead in the deadliest wildfire in the United States in almost a century, and teams had examined only 25% of the destroyed communities.

"This is strong confirmation — based on real data — that utility grid faults were likely the ignition source for multiple wildfires on Maui," said Bob Marshall, founder and CEO of Whisker Labs, which has 78 sensors across Maui and is part of a robust network of hundreds of thousands monitoring grids across the US.

Last Monday (8/7/2023) night, as Upcountry conservationists attempted to preserve endangered birds, the fire quickly spread across Kula's meadows, dense trees, dead limbs, and eucalyptus-flecked gulches. A few hours later, another fire broke out near an electrical substation in Lahaina, across the island.

The utility has been under fire for days after The Washington Post reported that, despite warnings, the company did not cut power in advance of the windstorm to avoid sparking wildfires. It had not implemented a power shut-off strategy, as many utilities in California and other states have.

Nina Rivers, who lives near the bird sanctuary, said she and her neighbors sent the utility emails and videos of low-hanging power lines in trees. She stated that there had been previous fires caused by electrical equipment, but that rescuers had always extinguished them swiftly.

On August 8, some 38 miles from the bird sanctuary, in Lahaina, a young woman named La'i awoke suddenly around 3 a.m. Something bright had flashed outside her second-story window, emanating from power poles and the Hawaiian Electric substation just up the hill from her family's home on Lahainaluna Road. Then everything went dark again. La'i, whose parents asked that her full name not be revealed, had been unable to believe how fierce the wind sounded before she fell asleep.

The flash was also captured by Whisker Labs' seven sensors in Lahaina, which showed two large faults at 2:44 a.m. and 3:30 a.m. They were two of 34 problems that occurred between 11:38 p.m. on Monday and 5 a.m. on Tuesday. When the voltage on a power line dips, it signifies that "a lot of energy was dissipated somewhere that is not typical," according to Marshall. This discharge indicates that sparks could be flying through the air and landing on dry trees and grasses.

"It is unambiguous that Hawaiian Electric's grid experienced immense stress for a prolonged period of time," Marshall said, comparing the changing data to the grid's consistent measurements from weeks and months before. "There were dozens upon dozens of major faults on the grid, and any one of those could have been the ignition source for a fire."

Carly Agbayani, across the street, awoke around 5 a.m. sweating next to her husband. She instantly realized that her air conditioning was turned off and her lights were not working.

The 46-year-old hotel worker was buffeted by high, dusty gusts as she walked outside her home, which faces an empty lot, another home, and the Hawaiian Electric power plant. Her air conditioner began to run again at 6 a.m., she recalled. Two other residents recall their systems going back online, at least for a short while.

According to sensor data, power was restored temporarily in areas of Lahaina from 6:10 a.m. to 6:39 a.m. before going out again. And that's a concern, according to wildfire and energy experts, because re-energized lines "were all of a sudden a potential new source of fire in the community," said Michael Wara, director of Stanford University's Climate and Energy Policy Program.

Marshall stated that the electrical grid was clearly experiencing major problems at this point. The question is how much Hawaiian Electric was aware of these issues and what, if anything, it did with that knowledge.

How to prevent wildfires like the one in Maui (Hawaii): "need Prognostic Models for Prediction," Dr. John Wang said. Every year, forest fires inflict significant losses all around the world. A good forecast of fire spread is critical for minimizing the devastation caused by these threats. The prediction technique is coupled with a wind field model and a weather forecast model, allowing the system to adapt dynamically to complicated terrain and changing conditions.
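To make the idea of coupling a spread forecast to a wind field concrete, here is a toy sketch in Python. It is not the prognostic model referred to above. It assumes a flat grid, uniform fuel, and a single constant wind vector, and it estimates the earliest time fire could reach each cell with a shortest-path search in which spread is faster downwind. The function name `fire_arrival_times` and the parameters `base_rate` and `wind_gain` are hypothetical and chosen only for illustration.

```python
import heapq
import math


def fire_arrival_times(rows, cols, ignition, wind, base_rate=1.0, wind_gain=0.5):
    """Return a grid of earliest fire arrival times (arbitrary time units).

    wind is a (d_row, d_col) vector pointing where the wind blows toward;
    its length acts as wind strength. All values are illustrative assumptions.
    """
    times = [[math.inf] * cols for _ in range(rows)]
    times[ignition[0]][ignition[1]] = 0.0
    heap = [(0.0, ignition)]
    # Fire may step to any of the eight neighbouring cells.
    steps = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]

    while heap:
        t, (r, c) = heapq.heappop(heap)
        if t > times[r][c]:
            continue  # stale queue entry, a faster route was already found
        for dr, dc in steps:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            dist = math.hypot(dr, dc)
            # Wind component along the direction of spread (tailwind only).
            along = (wind[0] * dr + wind[1] * dc) / dist
            rate = base_rate * (1.0 + wind_gain * max(0.0, along))
            arrival = t + dist / rate
            if arrival < times[nr][nc]:
                times[nr][nc] = arrival
                heapq.heappush(heap, (arrival, (nr, nc)))
    return times


if __name__ == "__main__":
    # Wind blowing toward increasing column index, ignition in the grid centre.
    grid = fire_arrival_times(5, 5, ignition=(2, 2), wind=(0, 2))
    for row in grid:
        print(" ".join(f"{t:5.2f}" for t in row))
```

In a real forecasting system the constant `wind` vector would be replaced by a time-varying wind field and weather forecast, and the spread rate would also depend on terrain and fuel; this sketch only shows how a wind term can bias the predicted arrival times.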

Power lines likely caused Maui’s first reported fire in Hawaii

By: John X. Wang

Subjects: Disaster Planning & Recovery

A security camera at the Maui Bird Conservation Center captured a brilliant flash in the woods around 10:47 p.m. last Monday, illuminating the trees swinging in the wind. "I believe that is when a tree falls on a power line," Jennifer Pribble, a senior research coordinator at the institution, says in an Instagram video.

“The power goes out, our generator kicks in, the camera comes back online, and then the forest is on fire.”

Although no single cause has been identified, experts believe that energized power lines toppled by severe winds may have caused a wildfire that eventually devastated Lahaina. On August 8, brush fires were already raging on Maui and the island of Hawaii.

Maui County officials assured residents that morning that a small brush fire in Lahaina had been extinguished. Still, they later issued an advisory describing "an afternoon flare-up" that necessitated evacuations.

The fires on the islands were fueled by a mix of low humidity and strong mountain winds caused by Hurricane Dora, a Category 4 storm in the Pacific Ocean hundreds of miles to the south.

Drought conditions have likely worsened in recent weeks as well. According to the U.S. Drought Monitor, about 16% of Maui County was under extreme drought a week ago.

Power equipment that could not withstand heavy winds, and power lines that were kept electrified despite warnings of high winds, are major factors behind the wildfire.

The footage adds to evidence that equipment belonging to the state's primary utility started many fires last week, when high winds blasted through drought-stricken grasslands, as had been forecast for days. While the still-burning Makawao fire had no connection with the inferno that raged into Lahaina, it was one of numerous fires that broke out on August 7 and 8.

At least one of those flared up, contributing to the raging fire that engulfed Lahaina, overpowering residents, tourists, and firefighters. As of Tuesday (8/15/2023), 99 people had been confirmed dead in the deadliest wildfire in the United States in almost a century, and teams had examined only 25% of the destroyed communities.

"This is strong confirmation — based on real data — that utility grid faults were likely the ignition source for multiple wildfires on Maui," said Bob Marshall, founder and CEO of Whisker Labs, which has 78 sensors across Maui and is part of a robust network of hundreds of thousands monitoring grids across the US.

Last Monday (8/7/2023) night, as Upcountry conservationists attempted to preserve endangered birds, the fire quickly spread across Kula's meadows, dense trees, dead limbs, and eucalyptus-flecked gulches. A few hours later, another fire broke out near an electrical substation in Lahaina, across the island.

The utility has been under fire for days after The Washington Post reported that, despite warnings, the company did not cut power in advance of the windstorm to avoid sparking wildfires. It had not implemented a power shut-off strategy, as many utilities in California and other states have.

Nina Rivers, who lives near the bird sanctuary, said she and her neighbors sent the utility emails and videos of low-hanging power lines in trees. She stated that there had been previous fires caused by electrical equipment, but that rescuers had always extinguished them swiftly.

On August 8, some 38 miles from the bird sanctuary, a young woman in Lahaina named La'i awoke suddenly around 3 a.m. Something bright had flashed outside her second-story window, coming from the power poles and the Hawaiian Electric substation just up the hill from her family's home on Lahainaluna Road. Then everything went dark again. La'i, whose parents asked that her full name not be revealed, couldn't believe how fierce the wind had sounded before she fell asleep.

The flash was also captured by Whisker Labs' seven sensors in Lahaina, which showed two large faults at 2:44 a.m. and 3:30 a.m. They were two of 34 problems that occurred between 11:38 p.m. on Monday and 5 a.m. on Tuesday. When the voltage on a power line dips, it signifies that "a lot of energy was dissipated somewhere that is not typical," according to Marshall. This discharge indicates that sparks could be flying through the air and landing on dry trees and grasses.
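The idea of reading faults from voltage dips can be illustrated with a minimal Python sketch. It is not Whisker Labs' method, which is not described here. The function name `find_voltage_dips`, the 120 V nominal level, the 10% dip threshold, and the sample voltages are all assumptions made for illustration.

```python
from typing import List, Tuple

def find_voltage_dips(
    samples: List[Tuple[str, float]],
    nominal_volts: float = 120.0,  # assumed nominal level, not from the article
    dip_fraction: float = 0.10,    # assumed threshold: flag dips deeper than 10%
) -> List[Tuple[str, float]]:
    """Return the (timestamp, voltage) samples that fall below the dip threshold."""
    floor = nominal_volts * (1.0 - dip_fraction)
    return [(ts, volts) for ts, volts in samples if volts < floor]

# Hypothetical readings: the timestamps echo the article, the voltages are made up.
readings = [("02:43", 119.6), ("02:44", 97.2), ("02:45", 118.9), ("03:30", 101.5)]
dips = find_voltage_dips(readings)
```

A real monitoring system would likely compare each reading against a baseline built from weeks of history rather than a fixed threshold, much as Marshall describes comparing the night's data with earlier measurements.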

"It is unambiguous that Hawaiian Electric's grid experienced immense stress for a prolonged period of time," Marshall said, comparing the changing data to the grid's consistent measurements from weeks and months before. "There were dozens upon dozens of major faults on the grid, and any one of those could have been the ignition source for a fire."

Carly Agbayani, across the street, awoke around 5 a.m. sweating next to her husband. She instantly realized that her air conditioning was turned off and her lights were not working.

The 46-year-old hotel worker was buffeted by high, dusty gusts as she walked outside her home, which faces an empty lot, another home, and the Hawaiian Electric power plant. Her air conditioner began to run again at 6 a.m., she recalled. Two other residents recall their systems going back online, at least for a short while.

According to sensor data, power was restored temporarily in areas of Lahaina from 6:10 a.m. to 6:39 a.m. before going out again. And that's a concern, according to wildfire and energy experts, because lines that had been de-energized and were then re-energized "were all of a sudden a potential new source of fire in the community," said Michael Wara, director of Stanford University's Climate and Energy Policy Program.

Marshall stated that the electrical grid was clearly experiencing major problems at this point. The question is how much Hawaiian Electric was aware of these issues and what, if anything, it did with that knowledge.

Dr. John Wang's contributions to System Engineering, Information Science, Applied Statistics, and Industrial Engineering

By: John X. Wang

Subjects: Engineering - General

Dr. John Wang's outstanding contributions to System Engineering, Information Science, Applied Statistics, and Industrial Engineering

-

- created the world's first paper to apply Information Science to Fault Diagnosis based on Fault Tree Analysis, a tool widely used in industry and academic research.

- defined Fault Diagnosis as a process of reducing Information Uncertainty.

-

- developed an optimal inspection sequence in fault diagnosis based on Fault Tree Analysis, with applications to Risk-Based Inspections in the Electric Utility Industry, Power Generation Industries (Oil & Gas, Hydraulic, Nuclear Power, etc.), and Chemical Industries.

- led to a follow-up Ph.D. dissertation at Delft University of Technology (TU Delft) in the Netherlands.

-

Research paper, coauthored with Rune Reinertsen, Norwegian University of Science and Technology (NTNU) in Trondheim, General inspection strategy for

fault diagnosis—minimizing the inspection costs, Mar 1, 1995,

Elsevier

- applied to North Sea oil exploration, Norway's oil and gas drilling, Norway's offshore energy sector (oil, gas, wind, and renewables), Norway's petroleum industry, and Norway's deep-sea mining project, described as the world's first commercial one.
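To make the idea of a cost-minimizing inspection sequence concrete, here is a minimal Python sketch. It does not reproduce the paper's strategy. It uses the classical textbook rule for the simplest case, which assumes exactly one component has failed and inspects components in decreasing order of fault probability per unit inspection cost. The component names, probabilities, and costs are hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Sequence

def expected_cost(order: Sequence[str], prob: Dict[str, float], cost: Dict[str, float]) -> float:
    """Expected total cost when components are inspected in `order` until the fault is found."""
    total = 0.0
    spent = 0.0
    for name in order:
        spent += cost[name]          # cost paid by the time this component is checked
        total += prob[name] * spent  # weighted by the chance the fault is here
    return total

def ratio_order(prob: Dict[str, float], cost: Dict[str, float]) -> List[str]:
    """Order components by fault probability per unit inspection cost, highest first."""
    return sorted(prob, key=lambda name: prob[name] / cost[name], reverse=True)

# Hypothetical single-fault example: probabilities sum to 1.
prob = {"pump": 0.5, "valve": 0.3, "sensor": 0.2}
cost = {"pump": 4.0, "valve": 1.0, "sensor": 1.0}

best = ratio_order(prob, cost)
# Brute-force check over all orderings, feasible only for small systems.
brute_force = min(permutations(prob), key=lambda o: expected_cost(o, prob, cost))
```

In this example the likeliest culprit (the pump) is inspected last because checking it is expensive, which shows why probability alone is not enough to rank inspections.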

-

Research paper, Complexity as a Measure of the Difficulty of System Diagnosis, International Journal of General Systems · Mar 1, 1996, Taylor & Francis

- established the relationship between thermodynamics and engineering systems.

- mathematically derived an entropy function for System Safety and Reliability based on the relationship above.

- laid the foundation for Wang entropy, which is widely applied in high-speed railways, autonomous vehicles, autonomous driving, electric vehicles, aerospace, space, aviation, avionics, medical devices/healthcare, Artificial Intelligence (AI), machine learning, deep learning, etc.

-

Book as the first author; coauthor: Marvin L. Roush, What Every Engineer Should Know About Risk Engineering and Management, CRC Press/Marcel Dekker, Inc. · Feb 15, 2000

- created Risk Engineering as a new engineering discipline.

- introduced Risk Engineering into engineering education.

-

Book, What Every Engineer Should Know About Decision Making, CRC Press/Marcel Dekker, Inc. · Jul 1, 2002

- formulated the problems of high-stakes engineering decision-making in the investigative and corporate sectors as problems of optimizing engineering decision variables to maximize payoff based on values, utility functions, and preferences.
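As a rough illustration of choosing among options to maximize payoff under a utility function, the following Python sketch compares two hypothetical design options by expected utility. The option names, payoffs, probabilities, and the square-root utility are assumptions for illustration and are not taken from the book.

```python
import math
from typing import Callable, Dict, List, Tuple

Lottery = List[Tuple[float, float]]  # (probability, payoff) pairs

def expected_utility(lottery: Lottery, utility: Callable[[float], float]) -> float:
    """Probability-weighted utility of a set of possible payoffs."""
    return sum(p * utility(payoff) for p, payoff in lottery)

def best_option(options: Dict[str, Lottery], utility: Callable[[float], float]) -> str:
    """Name of the option with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name], utility))

def risk_neutral(payoff: float) -> float:
    return payoff

def risk_averse(payoff: float) -> float:
    return math.sqrt(payoff)  # concave utility: large gains count for less

# Hypothetical options: a certain payoff versus a gamble with a higher mean.
options: Dict[str, Lottery] = {
    "proven design": [(1.0, 100.0)],
    "novel design": [(0.5, 324.0), (0.5, 0.0)],
}

neutral_choice = best_option(options, risk_neutral)
averse_choice = best_option(options, risk_averse)
```

The two decision makers see the same payoffs yet choose differently. The gamble has the higher expected payoff (162 versus 100), but under the concave utility the certain option scores 10 against 9, so preferences change the optimal decision.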

-

Book, Engineering Robust Designs with Six Sigma, Prentice Hall · Mar 7, 2005

- developed a systematic process with eight steps to maximize reliability, efficiency, flexibility, and affordability.

-

Book, Industrial Design Engineering: Inventive Problem Solving. CRC Press · Feb 9, 2017

- authored the world's first book on Industrial Design Engineering.

- featured as ISE Magazine's May 2017 Book of the Month.

-

Research paper, Complexity as a Measure of the Difficulty of System Diagnosis in Next-Generation Aircraft Health Monitoring System, SAE Technical Paper, 2019-01-1357

- applied Wang entropy to reduce the difficulty of system diagnosis in next-generation aircraft health monitoring systems, minimizing the average number of airborne inspections needed to find the combination of component failures causing the observed symptoms of system failure.
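The link between entropy and the number of inspections can be sketched in Python. Wang entropy is not defined in this profile, so the sketch uses ordinary Shannon entropy as a stand-in and assumes a single faulty component. The component labels and probabilities are hypothetical. Entropy measures the remaining uncertainty about which component failed, and each inspection is scored by how much uncertainty it is expected to remove.

```python
import math
from typing import Dict

def entropy_bits(prob: Dict[str, float]) -> float:
    """Shannon entropy, in bits, of a distribution over fault hypotheses."""
    return -sum(p * math.log2(p) for p in prob.values() if p > 0)

def expected_entropy_after(prob: Dict[str, float], inspected: str) -> float:
    """Expected remaining entropy after inspecting one component (single-fault assumption)."""
    p_found = prob[inspected]
    if p_found >= 1.0:
        return 0.0
    # If the inspected component is healthy, renormalize over the remaining ones.
    rest = {name: p / (1.0 - p_found) for name, p in prob.items() if name != inspected}
    # If it is faulty (probability p_found), the diagnosis is complete and entropy is zero.
    return (1.0 - p_found) * entropy_bits(rest)

def information_gain(prob: Dict[str, float], inspected: str) -> float:
    """Expected reduction in uncertainty from inspecting one component."""
    return entropy_bits(prob) - expected_entropy_after(prob, inspected)

# Hypothetical fault probabilities given the observed symptoms.
prob = {"A": 0.5, "B": 0.25, "C": 0.25}
most_informative = max(prob, key=lambda name: information_gain(prob, name))
```

Here the initial uncertainty is 1.5 bits, and inspecting component A is expected to remove 1 bit of it, more than either alternative, so A is the most informative first check.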

-

Book, What Every Engineer Should Know About Risk Engineering and Management, 2nd Edition, CRC Press · July 31, 2023

-

Authored the world’s first book on Risk Engineering of Artificial Intelligence (AI)/Machine Learning/Deep Learning.

-

Extended research work based on Wang entropy, which is being widely applied in high-speed railways, autonomous vehicles, autonomous driving, electric vehicles, aerospace, space, aviation, avionics, medical devices/healthcare, Artificial Intelligence (AI), machine learning, deep learning, etc.

Videos

Published: Feb 04, 2023

I heard she is oceans away / I set out on my way / I followed the scenic road / With her breath in my dreams

Published: Feb 03, 2023

Lyrics that I created for my novel "Are You Afraid of Love?". Link: https://authors.taylorandfrancis.com/news/8123 I created the lyrics based on my poem in Poetry Quarterly: The Winter/Summer 2012 edition. Link: https://authors.taylorandfrancis.com/news/8017

Published: Apr 06, 2015

On his YouTube page, Bob Willis reviewed Dr. John X. Wang's book "Green Electronics Manufacturing: Creating Environmental Sensible Products," which Dr. Wang dedicated to his "home by the green woods of Michigan."